Without a sense of touch, Frankenstein’s monster would never have realized that “fire bad,” and we would have had an unstoppable reanimated killing machine on our hands. So be thankful for the most underappreciated of your five senses, one that robots may soon enjoy themselves. On Monday, Facebook announced that it has developed a suite of tactile technologies that will give robots a sense of touch the mad doctor could never have imagined.

But why is Facebook even bothering with robotics research at all? “Before I joined Facebook, I was chatting with Mark Zuckerberg, and I asked him, ‘Is there any area related to AI that you think we shouldn't be working on?’” Yann LeCun, Facebook’s chief AI scientist, recalled during a recent press call. “And he said, ‘I can't find any good reason for us to work on robotics,’ so that was the start of our FAIR [Facebook AI Research] research, that we're not going to work on robotics.”

“Then, after a few years,” he continued, “it became clear that a lot of interesting progress in AI work is happening in the context of robotics because this is the nexus of where people in AI research are trying to get to; the full loop of perception, reasoning, planning and action, and then getting feedback from the environment.”

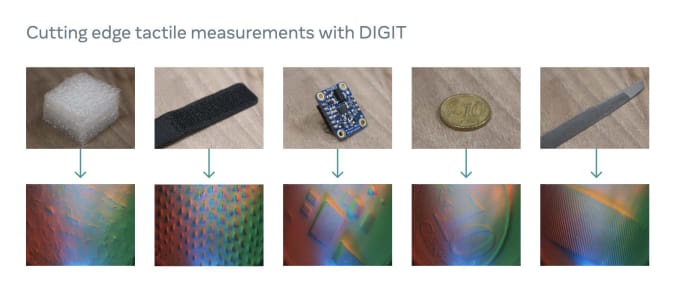

As such, FAIR has centered its tactile technology research on four main areas of study — hardware, simulation, processing and perception. We’ve already seen FAIR’s hardware efforts: the DIGIT, a “low-cost, compact high-resolution tactile sensor” that Facebook first announced in 2020. Unlike conventional tactile sensors, which typically rely on capacitive or resistive methods, DIGIT is actually vision-based.

“Inside the sensors there is a camera, there are RGB LEDs placed around the silicone, and then there is a silicone gel,” Facebook AI research scientist Roberto Calandra explained. “Whenever we touch the silicone on an object, this is going to create shadows or changes in color cues that are then recorded by the [camera]. These allow [DIGIT] to have extremely high resolution and extremely high spectral sensitivity while having a device which is mechanically very robust, and very easy and cheap to produce.”
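To make the mechanism Calandra describes a bit more concrete, here is a minimal sketch of how contact might be detected from a vision-based sensor's images: compare each frame against a reference frame captured with nothing touching the gel, and flag pixels whose brightness shifted. It assumes grayscale frames stored as nested lists with values between 0 and 1 (real DIGIT frames are RGB), and the function names and threshold values are illustrative assumptions, not part of DIGIT's or PyTouch's software.

```python
from typing import List

# Assumption: a frame is a grayscale image as nested lists, values in [0, 1].
Frame = List[List[float]]


def contact_mask(frame: Frame, reference: Frame, threshold: float = 0.1) -> List[List[bool]]:
    """Flag pixels whose brightness moved more than `threshold` away from
    the no-contact reference frame (shadows or color shifts from pressing)."""
    return [
        [abs(p - r) > threshold for p, r in zip(row, ref_row)]
        for row, ref_row in zip(frame, reference)
    ]


def contact_fraction(mask: List[List[bool]]) -> float:
    """Share of pixels flagged as touched."""
    total = sum(len(row) for row in mask)
    touched = sum(sum(row) for row in mask)  # True counts as 1
    return touched / total if total else 0.0


def is_touching(frame: Frame, reference: Frame,
                threshold: float = 0.1, min_fraction: float = 0.02) -> bool:
    """Report contact once enough of the image deviates from the reference."""
    return contact_fraction(contact_mask(frame, reference, threshold)) >= min_fraction


if __name__ == "__main__":
    reference = [[0.5] * 8 for _ in range(8)]
    pressed = [row[:] for row in reference]
    for i in (3, 4):
        for j in (3, 4):
            pressed[i][j] = 0.2  # a small dark "shadow" where an object presses in
    print(is_touching(pressed, reference))    # True
    print(is_touching(reference, reference))  # False
```

Differencing against a no-contact reference is about the simplest possible approach; it ignores lighting drift and gel wear, which is one reason learned models are attractive for this kind of sensor.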

Calandra noted that DIGIT costs about $15 to produce and that, because it is open-source hardware, its production schematics are available to universities and research institutions with fabrication capabilities. Thanks to a partnership with GelSight, it’s also available for purchase by researchers (and even members of the public) who can’t build their own.

For simulation, which lets ML systems train in a virtual environment without collecting heaps of real-world hardware data (much the way Waymo has refined its self-driving systems over the course of 10 billion computer-generated miles), FAIR has developed TACTO. The system can generate hundreds of frames of realistic, high-resolution touch readings per second and simulate vision-based tactile sensors like DIGIT, so researchers don’t have to spend hours upon hours tapping on sensors to build up a compendium of real-world training data.
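The article doesn’t describe how TACTO renders its readings, so the following is only a toy illustration of the general idea behind simulating an optical tactile sensor: compute how far an object pushes into the gel, then shade the deformed surface the way a camera under a light would see it. It assumes a rigid sphere pressed into a flat gel and simple Lambertian (diffuse) shading; it is not TACTO’s renderer, and every name and parameter below is hypothetical.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]


def indentation_map(size: int, pixel_mm: float, radius_mm: float, depth_mm: float) -> Grid:
    """How far (mm) a sphere pressed `depth_mm` into a flat gel pushes each
    pixel of a size x size patch centred under the sphere's tip."""
    if size < 2:
        raise ValueError("size must be at least 2")
    c = (size - 1) / 2
    grid = []
    for i in range(size):
        row = []
        for j in range(size):
            r = math.hypot(i - c, j - c) * pixel_mm
            if r >= radius_mm:
                row.append(0.0)
                continue
            # Height of the sphere's surface above its lowest point at distance r.
            gap = radius_mm - math.sqrt(radius_mm ** 2 - r ** 2)
            row.append(max(0.0, depth_mm - gap))
        grid.append(row)
    return grid


def shade(indent: Grid, pixel_mm: float, light: Tuple[float, float, float]) -> Grid:
    """Lambertian brightness of the deformed surface (height = -indentation).
    Expects a grid of at least 2 x 2 pixels."""
    rows, cols = len(indent), len(indent[0])
    lnorm = math.sqrt(sum(v * v for v in light))
    lx, ly, lz = (v / lnorm for v in light)
    image = []
    for i in range(rows):
        row = []
        for j in range(cols):
            # Central differences, falling back to one-sided ones at the borders.
            up, down = max(i - 1, 0), min(i + 1, rows - 1)
            left, right = max(j - 1, 0), min(j + 1, cols - 1)
            dh_dy = -(indent[down][j] - indent[up][j]) / ((down - up) * pixel_mm)
            dh_dx = -(indent[i][right] - indent[i][left]) / ((right - left) * pixel_mm)
            # Surface normal of z = h(x, y) is (-dh/dx, -dh/dy, 1), normalized.
            nx, ny, nz = -dh_dx, -dh_dy, 1.0
            nnorm = math.sqrt(nx * nx + ny * ny + nz * nz)
            row.append(max(0.0, (nx * lx + ny * ly + nz * lz) / nnorm))
        image.append(row)
    return image


if __name__ == "__main__":
    indent = indentation_map(size=21, pixel_mm=0.1, radius_mm=2.0, depth_mm=0.3)
    frame = shade(indent, pixel_mm=0.1, light=(0.0, 0.0, 1.0))
    print(frame[0][0], frame[10][12] < 1.0)  # 1.0 True
```

Under an overhead light, the untouched gel renders at full brightness while the sloped walls of the dent render darker; generating many such frames with varied objects and depths is what makes simulated training data cheap compared with tapping a physical sensor.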

“Today, if you want to use reinforcement learning, for example, to train a car to drive itself,” LeCun pointed out, “it would have to be done in a virtual environment because it would have to drive for millions of hours, cause, you know, countless thousands of accidents and destroy itself multiple times before it learns its way around, and even then it probably wouldn't be very reliable. So how is it that humans are capable of learning to drive a car with 10 to 20 hours of practice with hardly any supervision?”

“It's because, by the time we turn 16 or 17, we have a pretty good model of the world,” he continued. We inherently understand the implications of what would happen if we drove a car off a cliff because we’ve had nearly two decades of experience with the concept of gravity as well as that of fucking around and finding out. “So ‘how to get machines to learn that model of the world that allows them to predict events and plan what's going to happen as a consequence of their actions’ is really the crux of the problem here.”

Sensors and simulators are all well and good, assuming you’ve got an advanced Comp Sci degree and a deep understanding of ML training procedures. But many aspiring roboticists don’t have those sorts of qualifications, so to broaden the availability of DIGIT and TACTO, FAIR has developed PyTouch (not to be confused with PyTorch). While PyTorch is a general-purpose machine learning framework used heavily for computer vision and NLP, PyTouch centers on touch-sensing applications.

“Researchers can simply connect their DIGIT, download a pretrained model and use this as a building block in their robotic application,” Calandra and Facebook AI hardware engineer Mike Lambeta wrote in a blog post published Monday. “For example, to build a controller that grasps objects, researchers could detect whether the controller’s fingers are in contact by downloading a module from PyTouch.”
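The grasping example in that quote can be sketched as a simple control loop. In PyTouch, the contact signal would come from a downloaded pretrained model; here it is replaced by a hypothetical `in_contact(finger, position)` callable, and the finger names, step size and simulated object are assumptions for illustration, not PyTouch’s API.

```python
from typing import Callable, Dict, Iterable, Tuple

# Hypothetical stand-in for a contact-detection model: given a finger name and
# its current closing distance (mm), return True if that finger senses contact.
ContactFn = Callable[[str, float], bool]


def close_until_contact(fingers: Iterable[str], in_contact: ContactFn,
                        step_mm: float = 0.5,
                        max_steps: int = 100) -> Tuple[Dict[str, float], bool]:
    """Close each finger in `step_mm` increments, freezing it once it reports
    contact. Returns final positions and whether every finger made contact."""
    pos = {f: 0.0 for f in fingers}
    for _ in range(max_steps):
        moving = [f for f in pos if not in_contact(f, pos[f])]
        if not moving:
            return pos, True  # all fingers touching: the object is grasped
        for f in moving:
            pos[f] += step_mm
    return pos, False  # gave up before every finger made contact


if __name__ == "__main__":
    # Simulated object whose surfaces sit 3.0 mm and 4.5 mm from each fingertip.
    surfaces = {"left": 3.0, "right": 4.5}
    final, grasped = close_until_contact(surfaces, lambda f, p: p >= surfaces[f])
    print(final, grasped)  # {'left': 3.0, 'right': 4.5} True
```

Stopping each finger independently lets the gripper conform to an off-center object instead of shoving it aside when one finger touches first.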

Most recently, FAIR (in partnership with Carnegie Mellon University) has developed ReSkin, a touch-sensitive “skin” for robots and wearables alike. “This deformable elastomer has micro-magnetic particles in it,” Facebook AI research manager Abhinav Gupta said. “And then we have electronics: a thin, flexible PCB, which is essentially a grid of magnetometers. The sensing technology behind the skin is very simple: if you apply force into it, the elastomer will deform and, as it deforms, it changes the magnetic flux, which is read [by] magnetometers.”
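Gupta’s description suggests a straightforward way to read such a skin: subtract each magnetometer’s no-contact baseline and look for large changes in the measured field. The sketch below does that and estimates the contact point as a change-weighted centroid of the sensor positions. That centroid is an assumed, hand-written heuristic for illustration; FAIR’s ReSkin work relies on learned models to map the raw signals to contact location and force, and the grid layout, units and threshold here are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]   # (Bx, By, Bz) from one magnetometer
Point = Tuple[float, float]         # magnetometer (x, y) position on the PCB, mm


def flux_change(readings: Sequence[Vec3], baseline: Sequence[Vec3]) -> List[float]:
    """Magnitude of each magnetometer's 3-axis field change versus its
    no-contact baseline."""
    return [math.dist(r, b) for r, b in zip(readings, baseline)]


def localize_contact(readings: Sequence[Vec3], baseline: Sequence[Vec3],
                     positions: Sequence[Point], threshold: float) -> Optional[Point]:
    """Return an estimated contact point, or None if no sensor changed by more
    than `threshold`. Heuristic: centroid weighted by field change."""
    changes = flux_change(readings, baseline)
    active = [(d, p) for d, p in zip(changes, positions) if d > threshold]
    if not active:
        return None
    total = sum(d for d, _ in active)
    x = sum(d * p[0] for d, p in active) / total
    y = sum(d * p[1] for d, p in active) / total
    return (x, y)


if __name__ == "__main__":
    positions = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
    baseline = [(1.0, 1.0, 1.0)] * 4
    # A press between the two right-hand magnetometers shifts their Bz equally.
    readings = [(1.0, 1.0, 1.0), (1.0, 1.0, 5.0), (1.0, 1.0, 1.0), (1.0, 1.0, 5.0)]
    print(localize_contact(readings, baseline, positions, threshold=0.5))  # (10.0, 5.0)
```

Because the skin and the electronics are separate layers, a replaced swath of elastomer would need a fresh baseline, which in this sketch just means re-recording `baseline` with nothing touching it.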

“A generalized tactile sensing skin like ReSkin will provide a source of rich contact data that could be helpful in advancing AI in a wide range of touch-based tasks including object classification, proprioception, and robotic grasping,” Gupta wrote in a recent FAIR blog post. “AI models trained with learned tactile sensing skills will be capable of many types of tasks, including those that require higher sensitivity, such as working in health care settings, or greater dexterity, such as maneuvering small, soft, or sensitive objects.”

ReSkin is relatively inexpensive to produce, at about $6 per unit in a run of 100 units, yet surprisingly durable. The 2-3mm-thick material lasts for up to 50,000 touches and generates high-frequency, three-axis tactile signals with a temporal resolution of up to 400Hz and a spatial resolution of 1mm at 90 percent accuracy. Once a swath of ReSkin reaches its usable limit, replacing “the skin is as easy as taking a bandaid off and putting a new bandaid on,” Gupta quipped.

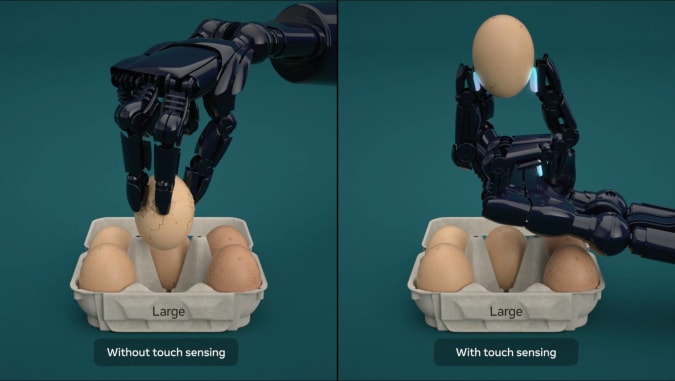

Given these properties, FAIR researchers foresee ReSkin being used in a number of applications, including in-hand manipulation (i.e., making sure a robot gripper doesn’t crush the egg it’s picking up); measuring tactile forces in the field; measuring how much force the human hand exerts on the objects it manipulates; and contact localization, essentially teaching robots to recognize what they’re reaching for and how much pressure to apply once they touch it.
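The egg example comes down to regulating grip force rather than merely detecting contact. One common textbook approach, shown here as a minimal sketch and not as FAIR’s method, is a proportional controller that keeps tightening in proportion to the gap between the measured and target force. The `read_force` callable stands in for a force estimate from a tactile skin such as ReSkin; it, the linear-spring egg model and the gains are all hypothetical.

```python
from typing import Callable, List, Tuple


def grip_to_force(read_force: Callable[[float], float], target_n: float,
                  gain_mm_per_n: float = 0.1, tol_n: float = 0.01,
                  max_steps: int = 500) -> Tuple[float, List[float]]:
    """Tighten the gripper (closure in mm) until the sensed force is within
    `tol_n` of `target_n`, moving by gain * force error each step."""
    closure = 0.0
    history: List[float] = []
    for _ in range(max_steps):
        force = read_force(closure)
        history.append(force)
        error = target_n - force
        if abs(error) <= tol_n:
            break
        closure += gain_mm_per_n * error  # proportional correction
    return closure, history


def egg(closure_mm: float) -> float:
    """Toy egg: no force until the fingers touch at 5 mm, then 2 N per mm of squeeze."""
    return 2.0 * max(0.0, closure_mm - 5.0)


if __name__ == "__main__":
    closure, history = grip_to_force(egg, target_n=1.0)
    print(round(closure, 3), round(history[-1], 3), max(history) <= 1.0)
```

With an object stiffness of k N/mm and a gain of g mm/N, each in-contact step multiplies the force error by (1 - kg). Keeping 0 < kg < 1 (here 2 × 0.1 = 0.2) makes the force approach the target from below without overshooting, which is exactly the property you want when the object is an egg.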

As with virtually all of its earlier research, FAIR has open-sourced DIGIT, TACTO, PyTouch and ReSkin in an effort to advance the state of the art in tactile sensing across the entire field.

Facebook is enabling a new generation of touchy-feely robots - Engadget
